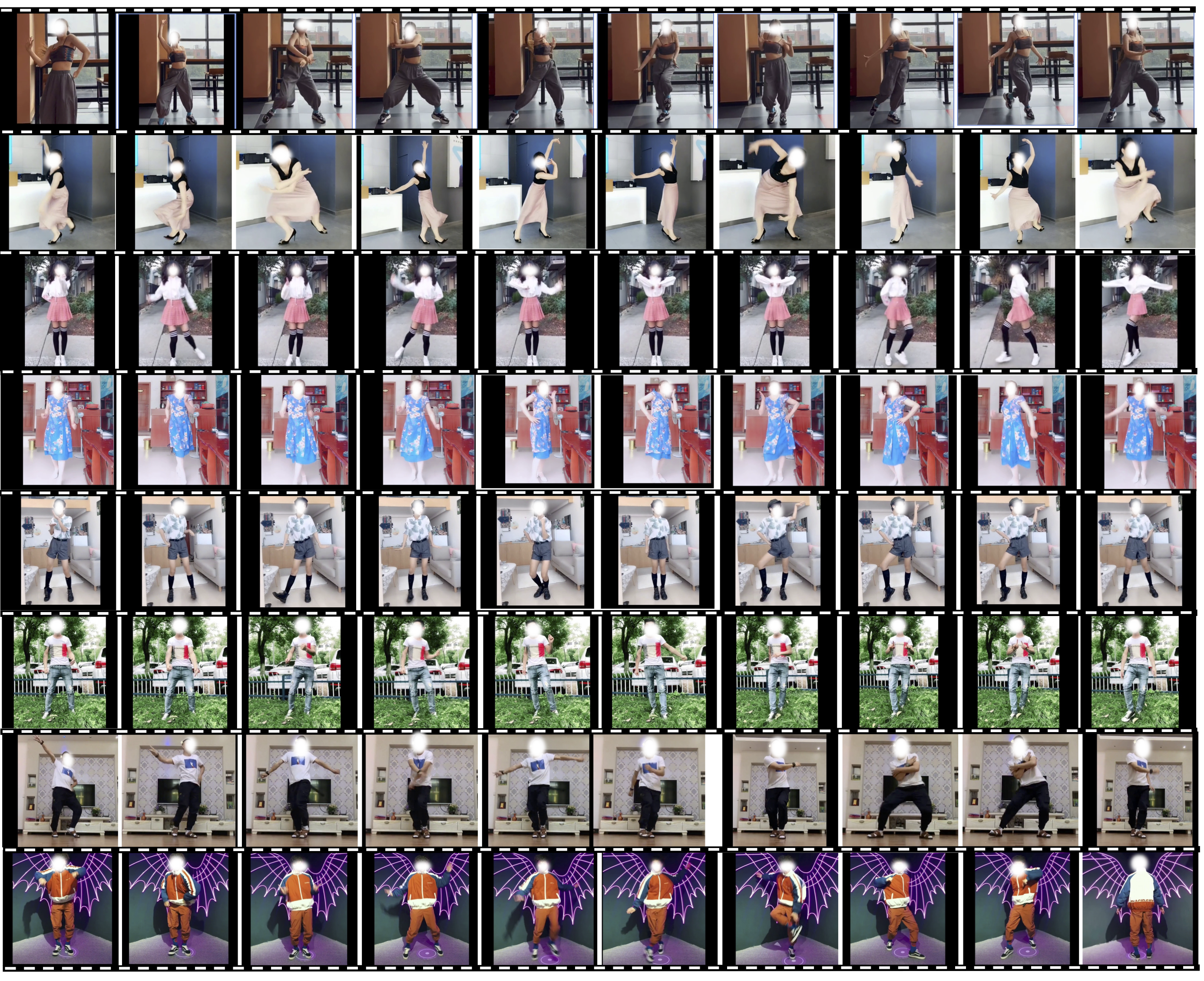

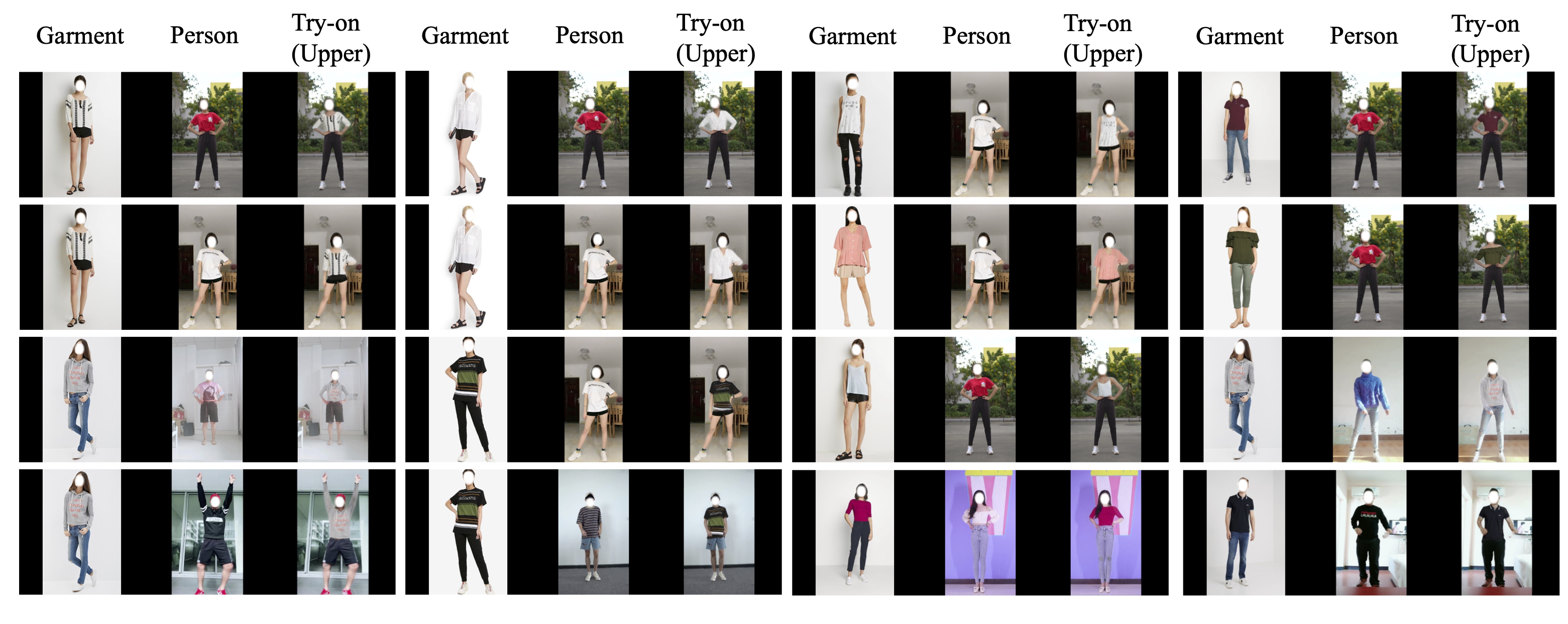

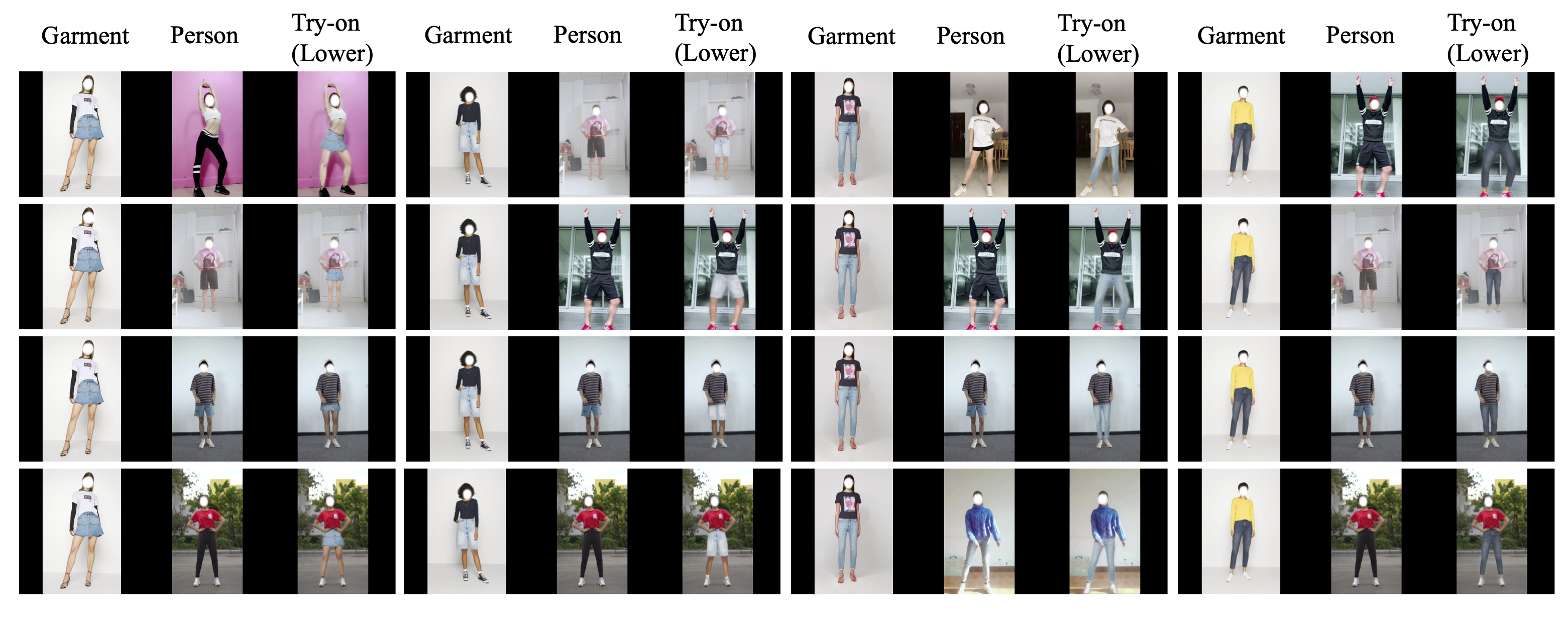

While significant progress has been made in garment transfer, one of the most applicable directions of human-centric image generation, existing works overlook in-the-wild imagery, where they exhibit severe garment-person misalignment as well as noticeable degradation in fine texture details. This paper, therefore, attends to virtual try-on in real-world scenes and brings essential improvements in authenticity and naturalness, especially for loose garments (e.g., skirts, formal dresses), challenging poses (e.g., crossed arms, bent legs), and cluttered backgrounds. Specifically, we find that the pixel flow excels at handling loose garments whereas the vertex flow is preferred for hard poses, and by combining their advantages we propose a novel generative network called wFlow that can effectively extend garment transfer to in-the-wild contexts. Moreover, former approaches require paired images for training. Instead, we reduce this burden by working on a newly constructed large-scale video dataset named Dance50k with self-supervised cross-frame training and an online cycle optimization. The proposed Dance50k can boost real-world virtual dressing by covering a wide variety of garments under dancing poses. Extensive experiments demonstrate the superiority of our wFlow in generating realistic garment transfer results for in-the-wild images without resorting to expensive paired datasets.

We learn realistic garment transfer by leveraging a collection of real-world dance videos scraped from the public internet. The dataset, named Dance50k, contains 50,000 single-person dance sequences (about 15s in duration) that feature varying poses and numerous garment types. For each video, we uniformly sample 10 frames, which gives \(C_{10}^2 = 45\) candidate \((I^s,I^t)\) pairs from which the training and testing pairs are formed. In addition, a simple check is applied to the candidates to ensure that the number of joints detected by OpenPose in \(I^s\) is greater than that in \(I^t\). Finally, we obtain a split of 949,626/15,815 training/testing image pairs.
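The pair construction described above can be sketched roughly as follows. This is a minimal illustration, not the authors' pipeline: the function names, the `joint_counts` mapping (standing in for per-frame joint counts from a pose estimator such as OpenPose), and the choice to orient each pair and drop ties are all assumptions made for the example.

```python
from itertools import combinations


def sample_frame_indices(num_frames, num_samples=10):
    """Pick num_samples frame indices spread evenly over the video."""
    if num_frames < num_samples:
        raise ValueError("video has fewer frames than requested samples")
    if num_samples == 1:
        return [0]
    step = (num_frames - 1) / (num_samples - 1)
    # Round half up so that consecutive indices never collide.
    return [int(i * step + 0.5) for i in range(num_samples)]


def build_pairs(frame_indices, joint_counts):
    """Turn sampled frames into (source, target) pairs.

    joint_counts is a hypothetical dict mapping a frame index to the number
    of joints detected in that frame. Assumption: each unordered pair is
    oriented so the source has strictly more joints than the target, and
    ties are dropped.
    """
    pairs = []
    for a, b in combinations(frame_indices, 2):
        if joint_counts[a] > joint_counts[b]:
            pairs.append((a, b))
        elif joint_counts[b] > joint_counts[a]:
            pairs.append((b, a))
    return pairs
```

With 10 sampled frames, `combinations` yields 45 unordered candidates per video, so the number of pairs that survive the joint-count check is at most 45.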

Download Dance50k Dataset:

The dataset can be downloaded from here. The image resolution is 512 x 512.

Please contact us if you have any questions about the dataset or our paper (dongxin.1016@bytedance.com or xiezhenyu.xiezhy@bytedance.com).

Licence:

@InProceedings{dong2022wflow,

title={Dressing in the Wild by Watching Dance Videos},

author={Xin Dong and Fuwei Zhao and Zhenyu Xie and Xijin Zhang and Kang Du and Min Zheng and Xiang Long and Xiaodan Liang and Jianchao Yang},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2022}

}